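```python
import gensim

# `documents`: the raw text strings (definition elided in the original)
texts = [document.lower().split() for document in documents]  # tokenization assumed; elided in the original
dictionary = gensim.corpora.Dictionary(texts)

# `gamma`: one document's variational topic weights, e.g. a row returned by LdaModel.inference()
topic_dist = gamma / sum(gamma)  # normalize into a probability distribution
```

```python
import pyLDAvis.gensim  # renamed pyLDAvis.gensim_models in newer pyLDAvis releases

pyLDAvis.enable_notebook()
vis = pyLDAvis.gensim.prepare(ldamodel, corpus, dictionary=ldamodel.id2word)
vis
```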
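The normalization line divides each topic weight by the total so the weights sum to one. As a minimal sketch, assuming `gamma` is a plain sequence of non-negative per-topic weights for a single document (the values below are made up for illustration, and `normalize` is a hypothetical helper name), the same operation in plain Python is:

```python
def normalize(gamma):
    """Turn non-negative per-topic weights into a probability distribution."""
    total = sum(gamma)
    if total <= 0:
        raise ValueError("gamma must contain at least one positive weight")
    return [g / total for g in gamma]


# Illustrative weights for a 4-topic model (made-up values)
gamma = [2.0, 1.0, 0.5, 0.5]
topic_dist = normalize(gamma)
print(topic_dist)  # [0.5, 0.25, 0.125, 0.125]
```

With a NumPy array the one-liner `gamma / sum(gamma)` does the same thing element-wise; the explicit check simply guards against dividing by zero.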

## Get plain text topics from gensim LDA: how to

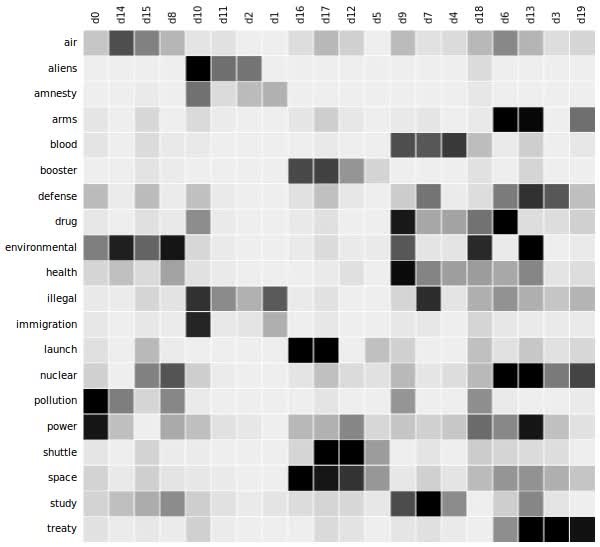

The challenge is extracting high-quality topics that are clear, distinct, and meaningful. Topic identification is a method for uncovering hidden subjects in large amounts of text, and Latent Dirichlet Allocation (LDA) is a common topic modeling algorithm with an excellent implementation in Python's Gensim package, `LdaModel()`. Gensim reports its training progress through Python's standard `logging` module, which can be directed to a file:

```python
import logging

# Send gensim's progress messages to a file (level truncated in the original; INFO assumed)
logging.basicConfig(filename="logfile", format='%(message)s', level=logging.INFO)
```

pyLDAvis is the most commonly used way to visualize the information contained in a topic model, and a convenient one.

The model has no functionality for remembering what the documents it has seen in the past are made up of. Each time you call `get_document_topics`, it infers that document's topic distribution again, so repeated calls need not return identical numbers; the results should, however, be similar if the trained model is good. Collecting the distribution of every document yields a D×T matrix, where D is the number of documents and T is the number of topics.

Timing your `get_max` function on the largest list you provided gives a little over 9 µs, which seems quite efficient. I have, however, written another function that does the same in a little more than 3 µs, almost 3x as fast, and for smaller lists the speedup becomes even greater (~10x for lists of size 1 or 2). There may be further speedups, but those are harder for me to test at the moment. A sort-based approach orders a document's `(topic_id, probability)` pairs by probability, so the first pair holds the most likely topic:

```python
def sort_by_probability(doc):  # hypothetical name: the function header is missing in the original
    # doc: list of (topic_id, probability) tuples; return them highest probability first
    return sorted(doc, key=lambda x: x[1], reverse=True)
```

From the documentation, there are two ways to get the main terms of a topic. If you are aiming to get the main terms in a specific topic, use `get_topic_terms`:

```python
from gensim.models import LdaModel

K = 10
lda = LdaModel(some_corpus, num_topics=K)  # some_corpus: a bag-of-words corpus
lda.get_topic_terms(5, topn=10)  # top 10 (word_id, probability) pairs for topic 5
```

Or, for all topics:

```python
for i in range(K):
    print(lda.get_topic_terms(i, topn=10))
```
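`get_topic_terms` returns `(word_id, probability)` pairs rather than words. To get plain-text topics, each id can be mapped back to its token through the model's vocabulary (`lda.id2word`, a gensim `Dictionary`, supports `id2word[word_id]` lookups); gensim's `show_topic(topicid, topn)` also returns `(word, probability)` pairs directly. The sketch below uses a plain dict and hand-made term lists as stand-ins for the model so it runs without gensim; `topic_to_text` is a hypothetical helper name and the values are made up.

```python
def topic_to_text(topic_terms, id2word, sep=" + "):
    """Render (word_id, probability) pairs as 'prob*"word"' text, highest first."""
    ranked = sorted(topic_terms, key=lambda pair: pair[1], reverse=True)
    return sep.join(f'{prob:.3f}*"{id2word[word_id]}"' for word_id, prob in ranked)


# Stand-ins for lda.id2word and lda.get_topic_terms(i, topn=3) (made-up values)
id2word = {0: "model", 1: "topic", 2: "corpus", 3: "word"}
terms_by_topic = {
    0: [(1, 0.12), (0, 0.08), (3, 0.05)],
    1: [(2, 0.20), (3, 0.10), (0, 0.02)],
}

for topic_id, terms in terms_by_topic.items():
    print(topic_id, topic_to_text(terms, id2word))
# 0 0.120*"topic" + 0.080*"model" + 0.050*"word"
# 1 0.200*"corpus" + 0.100*"word" + 0.020*"model"
```

Because the lookup only needs `id2word[word_id]`, passing the real `lda.id2word` in place of the dict should work the same way.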
If possible, a secondary output that would be nice to have is the document-topic matrix, in which each row corresponds to a document in my data frame and each column holds the document's probability of (or similarity to) the corresponding topic.
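A minimal sketch of such a document-topic matrix, assuming `doc_topics` is the list of lists of `(topic_id, probability)` tuples returned by `get_document_topics` and `num_topics` is the model's number of topics (the sample values are made up; `doc_topic_matrix` is a hypothetical helper). Each row is filled from its sparse tuples, topics that gensim omitted (below its `minimum_probability` threshold) stay 0, and a single `argmax` over the rows then gives every document's most likely topic.

```python
import numpy as np


def doc_topic_matrix(doc_topics, num_topics):
    """Densify sparse (topic_id, probability) lists into a D x T array."""
    matrix = np.zeros((len(doc_topics), num_topics))
    for row, doc in enumerate(doc_topics):
        for topic_id, prob in doc:
            matrix[row, topic_id] = prob
    return matrix


# Made-up output for three documents and a 4-topic model
doc_topics = [
    [(0, 0.1), (2, 0.7), (3, 0.2)],
    [(1, 0.9), (3, 0.1)],
    [(0, 0.4), (1, 0.35), (2, 0.25)],
]
m = doc_topic_matrix(doc_topics, num_topics=4)
most_likely = m.argmax(axis=1)  # most likely topic per document (row)
print(m.shape)      # (3, 4)
print(most_likely)  # [2 1 0]
```

gensim's `matutils.corpus2dense(doc_topics, num_topics).T` should produce an equivalent array, since it treats each document's tuples as one sparse column of a T x D matrix.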

But since gensim has already done all the computations, I keep thinking that those 45 seconds are spent merely reorganizing data, so there must be a more efficient way of doing this.

## Get plain text topics from gensim LDA: code

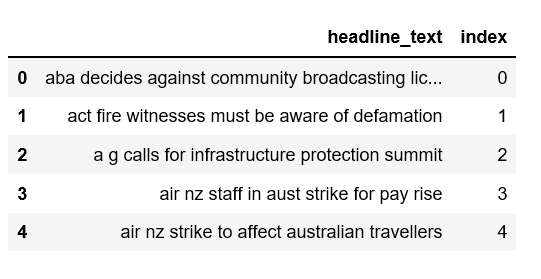

I am using gensim LDA to build a topic model for a collection of documents stored in a pandas data frame. Once the model is built, I can call `model.get_document_topics(model_corpus)` to get a list of lists of tuples showing the topic distribution for each document. For example, when I am working with 20 topics, I might get the following for the first three documents in my data frame:

This means that the most likely topic for document_1 is 7, for document_2 is 19, and for document_3 is 4. The primary output I would like to see is simply this most likely topic for each document.

The way I'm doing this now is with a loop:

```python
import numpy as np
data = ...
```

I have around 80k documents in my data frame, so this code takes about 45 seconds to execute.

Besides Latent Dirichlet Allocation (LDA), one of the first topic modeling algorithms implemented in Gensim is Latent Semantic Indexing (LSI), also called Latent Semantic Analysis (LSA). It was patented in 1988 by Scott Deerwester, Susan Dumais, George Furnas, Richard Harshman, Thomas Landauer, Karen Lochbaum, and Lynn Streeter.
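For the primary output, picking the top topic needs only a single pass over each document's pairs, so `max` with a key avoids the cost of a full sort. This is a minimal sketch, not the answerer's faster function (which is not shown); `most_likely_topics` is a hypothetical helper, the sample values are made up, and documents whose list comes back empty are mapped to `None`.

```python
from operator import itemgetter


def most_likely_topics(doc_topics):
    """Return the highest-probability topic id for each document (None if empty)."""
    by_prob = itemgetter(1)
    return [max(doc, key=by_prob)[0] if doc else None for doc in doc_topics]


# Made-up get_document_topics-style output for three documents
doc_topics = [
    [(3, 0.15), (7, 0.80), (12, 0.05)],
    [(19, 0.95)],
    [(4, 0.55), (9, 0.45)],
]
print(most_likely_topics(doc_topics))  # [7, 19, 4]
```

Assuming the data frame rows are in the same order as the corpus, the result can be assigned directly as a column, e.g. `df["topic"] = most_likely_topics(doc_topics)` (where `df` is a hypothetical name for that data frame).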
